Interesting https://medium.com/@jsoverson/was-rust-worth-it-f43d171fb1b3

Reforming Unix : https://github.com/Ericson2314/baccumulation/blob/main/reforming-unix.adoc

Managing big C language codebases can be challenging but is definitely possible : this very interesting post talks about curl https://daniel.haxx.se/blog/2023/12/13/making-it-harder-to-do-wrong/

Agile has failed ? I don’t think so : https://medium.com/developer-rants/agile-has-failed-officially-8136b0522c49 Anything applied as a religion is doomed to fail, and the same goes for Agile. You can’t take a methodology and apply it “as is” to a company, project or dev team; you need to adapt it to them, not make the company or team adapt to it.

What I hope is that we don’t throw away the good ideas of Agile (iterations/continuous delivery, attention to technical excellence, simplicity (avoiding over-engineering), just to mention a few).

State of the developer ecosystem : https://www.jetbrains.com/lp/devecosystem-2023/

Margaret Hamilton, the story of the first software engineer : https://levelup.gitconnected.com/the-untold-story-of-the-errorless-code-written-by-a-woman-that-took-man-to-the-moon

Avoid Try/Catch https://betterprogramming.pub/try-catch-considered-harmful and remember what Google recommends about exceptions (and C++ in general)

Thanks Mafe : The MANIAC by Benjamín Labatut; see also the AlphaGo documentary

Agile roadmaps : Now, Next, Later approach https://developingskills.substack.com/p/now-next-later

How to burn out a software engineer : https://engineercodex.substack.com/p/how-to-burnout-a-software-engineer My list, in order of priority : 1. Not shipping code (there is nothing worse than working for months on something that never goes to prod or lingers in the deploy queue). 2. Not trusting your engineers, telling them in fine detail how to do things. 3. Lack of recognition (recognition, not reward: there is a difference).

Google uses design documents to describe the design of new components : https://www.industrialempathy.com/posts/design-docs-at-google/

From a friend’s Substack, Chris Hedges talking about war :

“But these words give me a balm to my grief, a momentary solace, a little understanding, as I stumble forward into the void.”

Readability (and thus Maintainability) is the most important attribute of a code base and Google knows it : https://engineercodex.substack.com/p/how-google-writes-clean-maintainable

An open source 3D universe simulator with support for more than a billion objects : https://zah.uni-heidelberg.de/gaia/outreach/gaiasky/ based on data from the Gaia mission

Resources on Microservices https://blog.quastor.org/p/scaling-microservices-doordash-6c35

How Diffusion Models work i.e. how does an AI generate images : https://developer.nvidia.com/blog/improving-diffusion-models-as-an-alternative-to-gans-part-1/

Watch out for Thundering herds

Engineering Metrics : https://hybridhacker.email/p/diving-into-engineering-metrics

My next read : http://lawsofsimplicity.com/ John Maeda

System design and the cost of architectural complexity : https://dspace.mit.edu/handle/1721.1/79551

Simplicity was the key for Instagram backend (in 2011) : https://engineercodex.substack.com/p/how-instagram-scaled-to-14-million

Simplicity mindset : https://betterprogramming.pub/3-tips-to-adopt-a-simplicity-mindset-when-designing-software-711b95328062

Fastly supports Go on the edge : https://www.fastly.com/blog/announcing-standard-go-support-for-fastly-compute

Go WASI support : https://go.dev/blog/wasi

Any company providing support for a product would want to have something like this https://www.fixie.ai/

Meta Launches AI Code Writing tool https://www.theverge.com/2023/8/24/23843487/meta-llama-code-generation-generative-ai-llm : you can test it here : https://labs.perplexity.ai/

WhatsApp : https://newsletter.systemdesign.one/p/whatsapp-engineering

Photographs that are like films https://www.rencontres-arles.com/en/expositions/view/1447/gregory-crewdson

I find it so true : https://intenseminimalism.com/2013/conways-law/

Jaron Lanier on music and what Reality is in the end : https://www.newyorker.com/culture/the-weekend-essay/what-my-musical-instruments-have-taught-me

We lost Kevin (The Condor) Mitnick ( https://www.dignitymemorial.com/obituaries/las-vegas-nv/kevin-mitnick-11371668 ) : TCP is a much more secure protocol also thanks to him.

Yoshua Bengio (CA) and John Bunzl (UK), moderated by Nico A. Heller, on the strengths and limitations of current artificial intelligence, why it may become a dangerous instrument of disinformation, why superintelligent AI may be closer (years) than most previously expected (decades), and how this could lead to catastrophic outcomes, from AI-driven wars to the extreme risk of extinction. https://www.youtube.com/watch?v=07c1ZRUQOeY. Notes and general concepts from Bengio’s talk :

AI systems currently perceive the world and make sense of it through images, sound and text, and generate content in all 3 areas.

Current systems are not at the level of human intelligence. They master what psychologists call System 1 intelligence (intuition) : they react to any question or context with little or no reasoning. Arithmetic is an example : simple operations on numbers are fine, but on more complex ones (the kind we would need paper and pencil for) they make mistakes.

System 2 intelligence : explicit reasoning, which lets you plan and imagine. Example :

Driving on the left-hand side of the road after having driven on the right all your life. We take about an hour to adapt because we use reasoning, not intuition. AI will get there : how long will it take to bridge the gap between System 1 and System 2 ? Nobody knows; it could be close or it could take 10 years.

Training data currently needs to be filtered to remove content that appears insulting, homophobic, racist, dangerous or inappropriate. AI will get there too, removing the need to prepare training material by hand.

Machines that are as intelligent as we are will inevitably become more intelligent than us because they are machines : they are immortal, don’t sleep, and can exchange information at high speed as if they were one huge brain. Humans have culture to try to simulate this.

- pure intellectual power, completely detached from the goal. The goal will make the difference between a “good” AI and an “evil” one.

- humans cannot turn off compassion (or just most people can’t) as it has been hardwired into us by evolution; machines can

Stating a goal in a precise way is probably impossible, so even an AI with good goals might behave in evil ways.

Goals are not fully expressible; we can only give partial specifications.

We’ll get to the point where the game will be : whose AI is bigger/better/faster ?

The most important thing we can do to reduce the probability of bad behavior connected to AI is to limit the number of actors and the proliferation of materials and information (like we did for atomic bombs).

Regulatory frameworks are necessary.

Go : inside map implementation https://www.youtube.com/watch?v=Tl7mi9QmLns and https://phati-sawant.medium.com/internals-of-map-in-golang-33db6e25b3f8

Go 10 years after : https://blog.carlmjohnson.net/post/2023/ten-years-of-go-good-bad-meh/ and why we can live without subclassing https://hynek.me/articles/python-subclassing-redux/

Extreme readability https://www.moderndescartes.com/essays/readability/

AI can’t create software, only code : https://stackoverflow.blog/2023/06/26/the-hardest-part-of-building-software-is-not-coding-its-requirements

Some WASM Stuff :

– cloudflare wasm runtime https://github.com/cloudflare/workerd

– wasmtime runtime : https://github.com/bytecodealliance/wasmtime

– wasi-libc : still no thread support (June 2023) https://github.com/WebAssembly/wasi-libc

Ping Pong Programming : TDD + Pair Programming : https://www.agileconnection.com/article/ping-pong-programming-enhance-your-tdd-and-pair-programming-practices

Using AI in Ping Pong programming : https://www.mechanical-orchard.com/post/can-ai-play-the-tdd-pairing-game

AI Technology map : https://a16z.com/2023/06/20/emerging-architectures-for-llm-applications/

You build it, you own it : https://blog.alexewerlof.com/p/you-build-it-you-own-it

AI-generated sorting algorithms make it into LLVM/C++ https://www.nature.com/articles/s41586-023-06004-9

Superfast hash https://github.com/wangyi-fudan/wyhash

Leaked internal Google document on AI : https://www.semianalysis.com/p/google-we-have-no-moat-and-neither

Compression algorithms benchmarks : https://github.com/inikep/lzbench

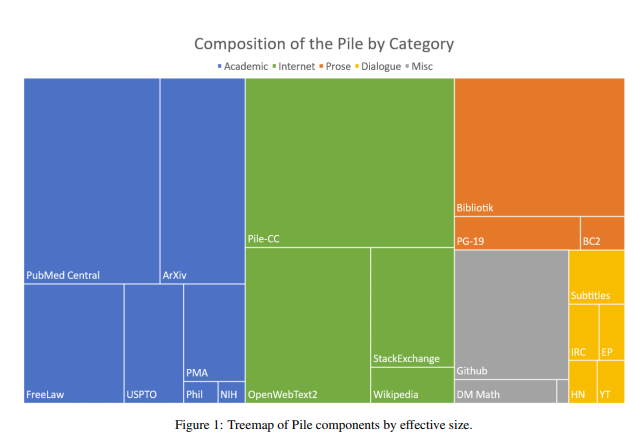

What’s inside the training data for LLMs https://arxiv.org/pdf/2101.00027.pdf

Cache Optimization Models and Algorithms : https://arxiv.org/pdf/1912.12339.pdf

ChatGPT Is a Blurry JPEG of the Web https://twitter.com/paulborile/status/1647640363555758083

Ordinals https://docs.ordinals.com/overview.html

NO Pause on AI development : https://medium.com/enrique-dans/a-pause-on-the-development-of-ai-its-not-going-to-happen-d4f894816e82

Cheatsheet for golang test, benchmarks, profiling https://github.com/samonzeweb/profilinggo

Yet another browser https://mullvad.net/it/browser promising to minimize tracking and fingerprinting.

Everything CLI https://www.commandlinefu.com/commands/browse

Amazon Is Making a New Web Browser ? https://gizmodo.com/amazon-prime-new-web-browser-survey-1850224922?utm_source=tldrnewsletter

Interview with OpenAI’s Greg Brockman: GPT-4 isn’t perfect, but neither are you https://techcrunch.com/2023/03/15/interview-with-openais-greg-brockman-gpt-4-isnt-perfect-but-neither-are-you/?utm_source=tldrnewsletter&guccounter=1

On jpeg-XL https://cloudfour.com/thinks/on-container-queries-responsive-images-and-jpeg-xl/#jpeg-xl-the-holy-grail-image-format

https://cloudinary.com/blog/the-case-for-jpeg-xl

On crypto and thieves :

“In February 2022, a hacker stole 120,000 wrapped Ethereum from Wormhole, a cross-blockchain bridge” https://newsletter.mollywhite.net/p/oasis-defi-centralization Subscribe to Molly White’s newsletter for unbiased crypto news.

B Corporation Certification : https://en.wikipedia.org/wiki/B_Corporation_(certification) “As of September 2022, there are 5,697 certified B Corporations across 158 industries in 85 countries.” https://www.bcorporation.net/en-us/

https://www.positive.news/economics/five-trends-sustainable-business/

https://lumalabs.ai/ : acquire 3d assets anywhere with a phone

https://roaringbitmap.org/ compressed bitmaps

Code red at Google : https://medium.com/@ignacio.de.gregorio.noblejas/can-chatgpt-kill-google-6d59742ee635

Node.js is aging like milk https://javascript.plainenglish.io/node-js-is-aging-like-milk-now-whats-next-a4e726ae668f :

Ryan Dahl, creator of Node.js, moved to Go.

TJ Holowaychuk, creator of the Express.js framework, moved to Go.

Boston dynamics showing off https://youtu.be/-e1_QhJ1EhQ

GPT-4 talk https://www.theverge.com/23560328/openai-gpt-4-rumor-release-date-sam-altman-interview

Fyne IDE, currently work in progress https://github.com/fyne-io/defyne

1995 paper by Niklaus Wirth, “A Plea for Lean Software” https://cr.yp.to/bib/1995/wirth.pdf